Precision defines the world’s most regulated industries

In life sciences, aerospace and defense, and automotive engineering, the margin for error is measured in microns, and the cost of a mistake can run into the millions. Every design decision, process improvement, or regulatory update must be traceable, auditable, and compliant.

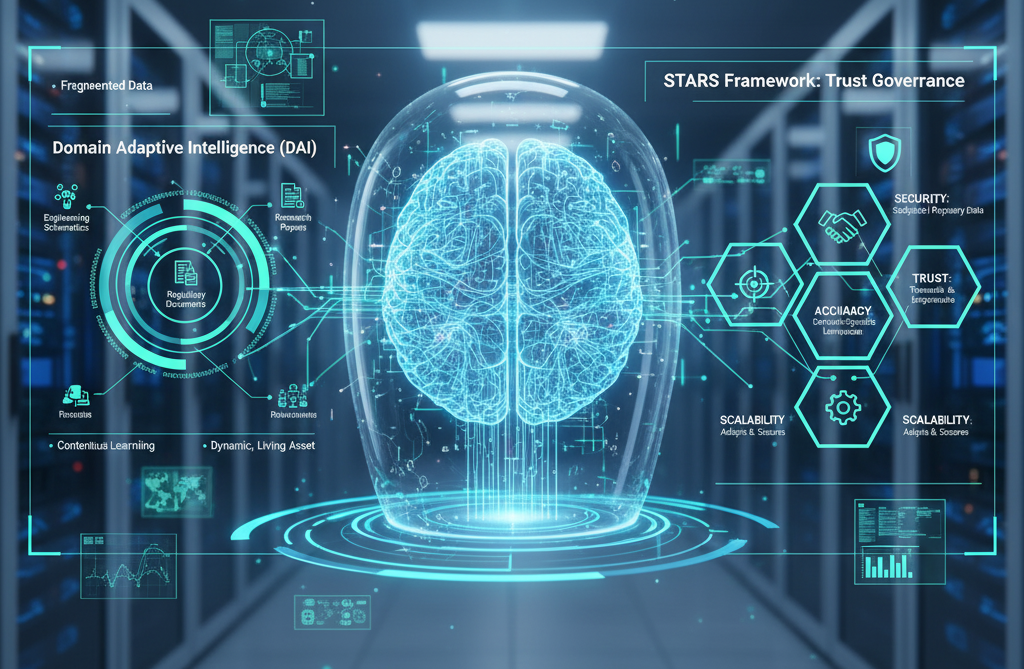

Artificial intelligence has the potential to accelerate this work dramatically, streamlining documentation, connecting knowledge, and enabling faster, data-driven innovation. But in these industries, AI cannot simply act. It must assist.

Where much of the AI community races toward autonomous “agents,” systems that perceive, decide, and act independently, the regulated world demands something different: AI that enhances human expertise rather than replacing it.

In regulated industries, the future belongs to AI assistants: bounded systems built for human-in-the-loop collaboration, transparency, and control.

From Agency to Assistance

AI research increasingly explores “agents”: systems designed to pursue goals through autonomous reasoning and action. While this model holds promise in consumer or low-risk domains, autonomy becomes a liability when safety, quality, and compliance are non-negotiable.

By contrast, AI assistants in regulated industries are built with bounded agency: clear operational limits that ensure human oversight at every critical juncture. They can automate data discovery, support design documentation, and synthesize complex regulatory knowledge, but they never make final decisions. That authority remains with the experts who carry accountability.

This distinction is not philosophical; it is practical.

In regulated environments, trust is earned through traceability. Every insight, recommendation, and draft must be explainable, verifiable, and auditable.

1. Human-in-the-Loop Control: Precision with Oversight

In industries where compliance determines market access, human oversight is not optional. It is a mandate.

AI assistants in regulated industries shine precisely because they operate under expert supervision.

They perform the heavy lifting, scanning thousands of pages of research papers, engineering reports, and regulatory guidance to find the data that matters. Yet every output is reviewed, interpreted, and validated by a qualified professional.

In life sciences, an AI assistant can sift through tens of thousands of pages of clinical trial data to identify relevant findings or regulatory precedents, but the scientist still ensures the interpretation aligns with FDA or EMA standards.

In aerospace and defense, assistants can assemble preliminary safety documentation, draft technical manuals, or cross-reference historical certifications, but final sign-off remains with licensed engineers and regulatory officers.

This model preserves accountability under frameworks such as FAA regulations, Good Manufacturing Practice (GMP), and ISO 9001, where every action must map to a human decision.
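The review gate described above can be sketched in a few lines of Python. This is a minimal illustration, not a description of any particular product: the `Draft` class, its `status` values, and the `release` function are hypothetical names chosen for this example. The point it shows is that an AI-generated draft cannot leave the system until a named person has signed off.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class Draft:
    """An AI-generated draft that stays unreleased until a named human approves it."""
    content: str
    status: str = "pending_review"          # hypothetical status values
    reviewer: Optional[str] = None
    decided_at: Optional[datetime] = None

    def approve(self, reviewer: str) -> None:
        # The decision is always attributed to a named, accountable person.
        if not reviewer.strip():
            raise ValueError("approval requires a named human reviewer")
        self.status = "approved"
        self.reviewer = reviewer
        self.decided_at = datetime.now(timezone.utc)


def release(draft: Draft) -> str:
    # Nothing is released without a recorded human sign-off.
    if draft.status != "approved":
        raise PermissionError("draft has not been approved by a human reviewer")
    return draft.content
```

Calling `release` on a fresh draft raises `PermissionError`; only after `approve("...")` records a reviewer does the content become available, so the released output can be traced back to a human decision.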

2. Bounded Agency: Safe Acceleration, Not Autonomy

The allure of autonomous AI agents lies in their promise of speed and independence. But in compliance-driven environments, unbounded autonomy is risk magnified.

AI assistants, by design, operate within clearly defined limits. They execute structured tasks, suggest options, and highlight risks, but stop short of taking unilateral action.

Consider the automotive industry, where the development of advanced driver-assistance systems (ADAS) is heavily regulated. An AI assistant can navigate massive repositories of design files, test data, and regulatory filings to identify references to specific safety protocols. It can even draft the first version of a compliance report. Yet the final review, contextual judgment, and approval remain solely with the human engineer or compliance officer.

This model reduces the risk of non-compliance or costly misinterpretations while allowing teams to work considerably faster.

It mirrors how engineering itself functions: through rigor, iteration, and controlled creativity.
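One simple way to express bounded agency in software is an explicit allowlist of actions. The sketch below is illustrative only: the `Action` names and the `authorize` function are assumptions made for this example, and the handling of human permissions is deliberately left out.

```python
from enum import Enum


class Action(Enum):
    SEARCH = "search"
    DRAFT = "draft"
    FLAG_RISK = "flag_risk"
    APPROVE = "approve"
    SUBMIT = "submit"


# Actions the assistant may perform on its own; everything else needs a human.
ASSISTANT_ALLOWED = frozenset({Action.SEARCH, Action.DRAFT, Action.FLAG_RISK})


def authorize(action: Action, actor_is_human: bool) -> bool:
    """Return True if the actor may perform the action."""
    if not actor_is_human:
        # The assistant is limited to the allowlist, with no exceptions.
        return action in ASSISTANT_ALLOWED
    # Assumption: human role checks happen elsewhere and are out of scope here.
    return True
```

Because the limit is a fixed set rather than a judgment the assistant makes at run time, the boundary itself can be reviewed and audited like any other controlled configuration.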

3. Transparency and Auditability: The Foundation of Trust

Trust in AI cannot be assumed. It must be demonstrated.

In regulated industries, every recommendation must be explainable, every data source traceable, and every output auditable. AI assistants make this possible by logging every step of their reasoning process: what documents were consulted, which standards informed a recommendation, and how conclusions were derived.

For instance, when an AI assistant reviews a repository of safety certifications to answer a query, it records exactly which documents were accessed, what terms were matched, and how the final summary was generated. This creates a complete audit trail that regulators and quality teams can verify.

Such transparency is vital when demonstrating compliance with frameworks like ITAR in aerospace or GMP in life sciences. It is the difference between AI that is useful and AI that is trustworthy.
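As a rough sketch of what such an audit record might contain, the Python below captures the three items mentioned above: the documents accessed, the terms matched, and the summary produced. The `AuditRecord` fields and the `record_query` helper are hypothetical, and the in-memory list stands in for whatever append-only store a real system would use.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditRecord:
    """One immutable entry describing how an answer was produced."""
    query: str
    documents_accessed: tuple
    terms_matched: tuple
    summary: str
    recorded_at: str


def record_query(log: list, query: str, documents, terms, summary: str) -> AuditRecord:
    """Build an audit record and append it to the log as a JSON line."""
    record = AuditRecord(
        query=query,
        documents_accessed=tuple(documents),
        terms_matched=tuple(terms),
        summary=summary,
        recorded_at=datetime.now(timezone.utc).isoformat(),
    )
    # Serialized entries are plain text, so reviewers can read them without special tools.
    log.append(json.dumps(asdict(record), sort_keys=True))
    return record
```

Each entry is written once and never modified in this sketch, which is the property a quality team would typically look for when verifying how a summary was derived.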

A parallel can be drawn to a common real-world challenge.

When a regulatory body updates the term “software verification” to “software validation,” every instance of the former, across test plans, procedures, and documentation, must be found, reviewed, and updated.

An AI assistant can trace these dependencies quickly, flag impacted records, and generate an audit log of every change. The process remains transparent, reviewable, and compliant, which is something a fully autonomous agent could not guarantee.
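The terminology update above can be illustrated with a short Python sketch. It assumes documents are available as plain text keyed by an identifier; the `propose_updates` function and the `pending_review` status are hypothetical names for this example. Note that it only proposes changes and applies none of them.

```python
import re


def propose_updates(documents: dict, old_term: str, new_term: str) -> list:
    """List every line containing old_term, with a suggested replacement for review."""
    pattern = re.compile(re.escape(old_term), re.IGNORECASE)
    proposals = []
    for doc_id, text in documents.items():
        for line_no, line in enumerate(text.splitlines(), start=1):
            if pattern.search(line):
                proposals.append({
                    "doc_id": doc_id,
                    "line": line_no,
                    "before": line,
                    # A function replacement inserts new_term literally.
                    "after": pattern.sub(lambda _match: new_term, line),
                    "status": "pending_review",  # a human accepts or rejects each one
                })
    return proposals
```

The match is case-insensitive, so a sentence that begins with "Software verification" is found, but the suggested text uses the new term exactly as supplied. Details like capitalization are one reason each proposal waits for a reviewer instead of being applied automatically.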

Empowering Regulated Industries: The Enginius.ai Model

Enginius.ai is leading this shift toward responsible, compliant AI adoption.

The Enginius.ai platform is purpose-built for industries where precision and accountability are inseparable from innovation.

Our AI assistants (Nova AI & Scribe AI) can:

- Search across tens of thousands of documents to locate relevant standards, prior reviews, and regulatory references in seconds.

- Draft technical documentation, compliance reports, or safety analyses automatically, while maintaining full traceability.

- Facilitate internal and external review cycles with AI-driven recommendations that enhance clarity and completeness.

At every step, human experts remain in control. The system is engineered so that every action taken by the AI is logged, explainable, and reversible.

This ensures compliance is never sacrificed for speed, and innovation never stalls under the weight of documentation.

Why the Human-in-the-Loop Model Wins

In environments where liability and ethics intertwine, full autonomy introduces unacceptable uncertainty.

AI assistants, grounded in human-in-the-loop design, offer a sustainable path forward, one that merges the efficiency of automation with the assurance of accountability.

This model safeguards organizations from regulatory and reputational risk while unlocking new productivity frontiers. It empowers engineers, scientists, and compliance officers to focus on high-value problem-solving instead of repetitive document handling or data retrieval.

Ultimately, AI in regulated industries must operate not as a decision-maker but as a decision accelerator.

Conclusion: The Future Is Collaborative Intelligence

The future of AI in regulated industries is not defined by machines acting alone but by collaboration between intelligent systems and expert humans.

AI assistants represent this next evolution, augmenting human judgment with computational depth, ensuring compliance with adaptive intelligence, and transforming how engineering knowledge is created, connected, and verified.

In life sciences, aerospace and defense, and automotive manufacturing alike, progress will depend on how well we integrate AI into the regulatory fabric of our industries.

By embracing assistants, not agents, we ensure that innovation remains fast, compliant, and accountable.

The question is no longer whether AI belongs in regulated engineering, but how responsibly it will be designed to serve it.

The answer lies in AI assistants: precise, explainable, and always human-guided.